Details

Gameplay Programmer

Technical Designer

Design Co-Lead

My Contributions

Pick up and play

To control the dragon the player must pick up the Dragon Guide. The dragon will attempt to match its own orientation to that of the Dragon Guide; point up to go up, point down to go down, turn around in place to make the dragon turn around. This approach is intuitive for new players, the hardest part being getting them to pick it up in the first place. Once in the hand, players near-instantly figure out how it works.

The dragon's rotation is not instant. It uses capped interpolation to maintain a smooth experience, and the rotation rate slows down at higher speeds to provide gameplay texture and maintain the comfort of the rider in VR. The dot product between the dragon's desired facing direction and its current facing direction drives the haptic response, letting the player feel the start and stop of their turns.
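A minimal sketch of the capped rotation and haptic mapping described above, written in plain Python rather than taken from the game's Unity scripts. The function names, the turn-rate falloff formula, its constants, and the mapping from dot product to rumble strength are all assumptions for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)

def max_turn_rate(speed, base_rate=math.radians(120.0), slowdown=0.02):
    # Hypothetical falloff: the faster the dragon flies, the slower it may turn.
    return base_rate / (1.0 + slowdown * speed)

def rotate_towards(current, desired, max_step):
    """Turn unit vector `current` toward `desired` by at most `max_step` radians."""
    current, desired = normalize(current), normalize(desired)
    cos_angle = max(-1.0, min(1.0, dot(current, desired)))
    if math.acos(cos_angle) <= max_step:
        return desired  # close enough to finish the turn this frame
    # Part of `desired` that is perpendicular to `current`.
    perp = tuple(d - cos_angle * c for c, d in zip(current, desired))
    if dot(perp, perp) < 1e-12:
        # Directions are exactly opposite: any perpendicular axis will do.
        helper = (0.0, 1.0, 0.0) if abs(current[1]) < 0.9 else (1.0, 0.0, 0.0)
        perp = tuple(h - dot(helper, current) * c for c, h in zip(current, helper))
    perp = normalize(perp)
    return tuple(math.cos(max_step) * c + math.sin(max_step) * p
                 for c, p in zip(current, perp))

def haptic_strength(current, desired):
    """Map the dot product (1 = aligned, -1 = opposite) to a 0..1 rumble level."""
    return (1.0 - dot(normalize(current), normalize(desired))) / 2.0
```

Each frame, a caller would pass `max_turn_rate(speed) * dt` as `max_step`, so the dragon never turns faster than the cap allows, and the rumble fades out as the two directions line up.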

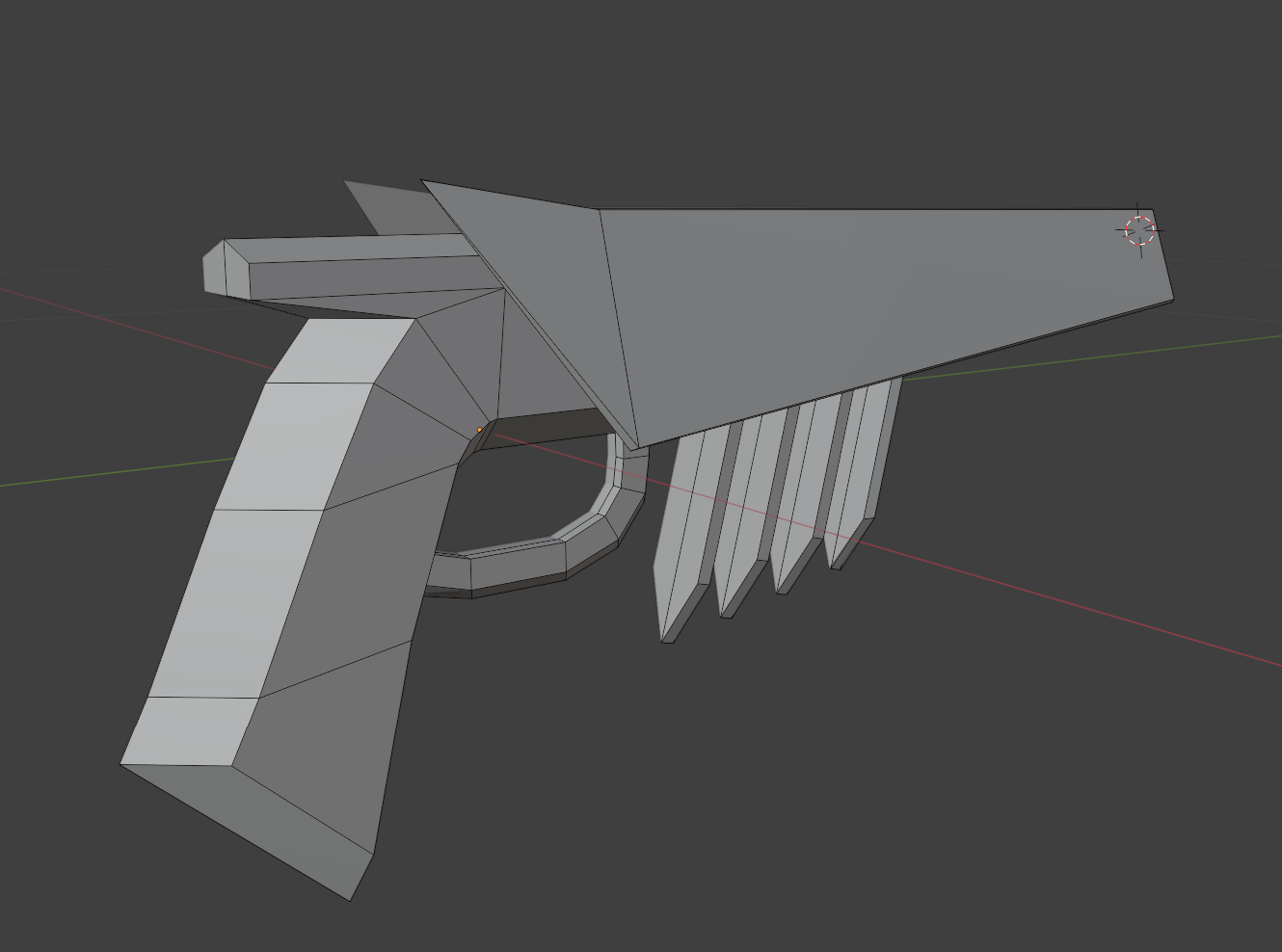

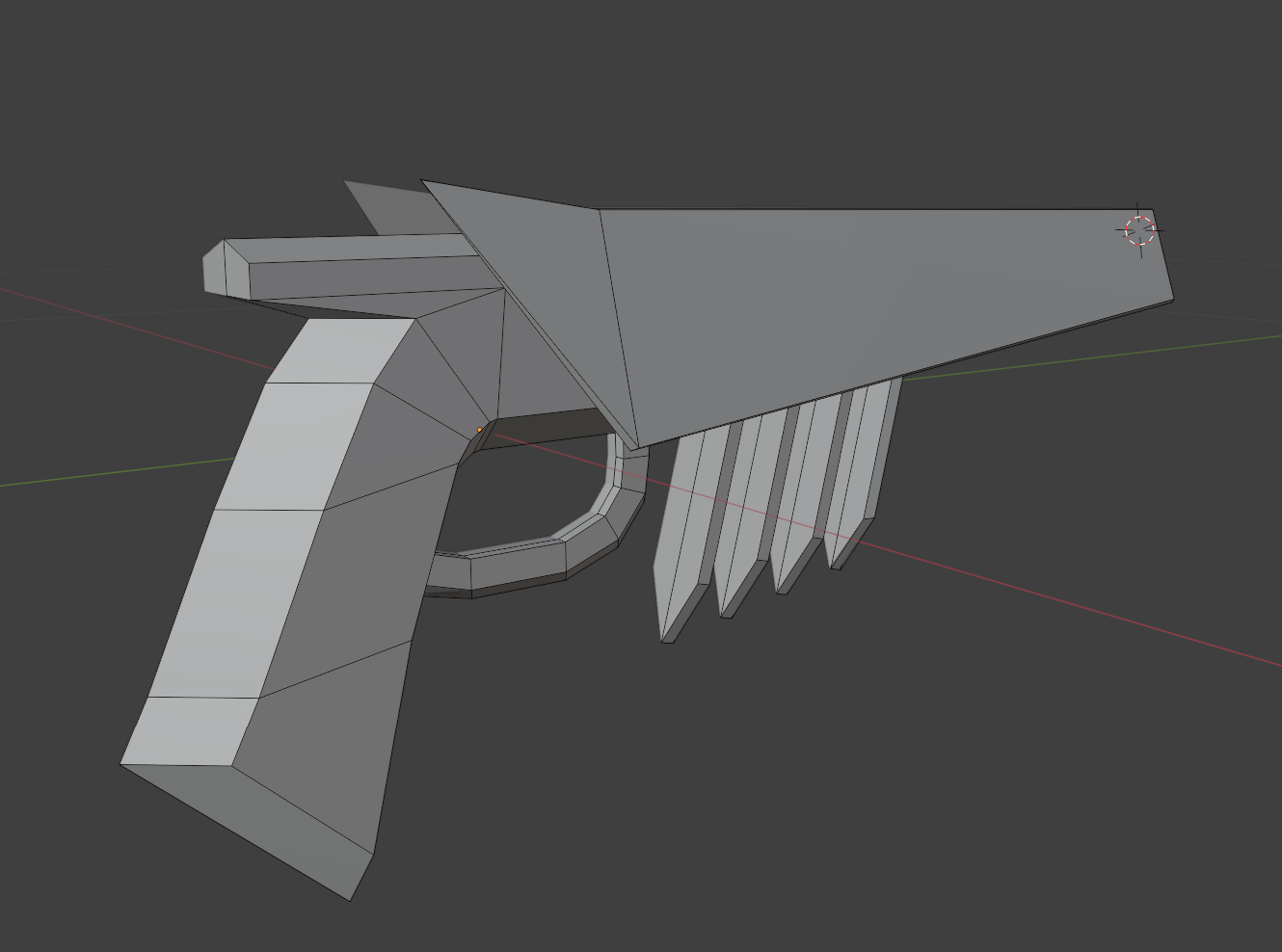

The Dragon Guide is shaped with a handle to provide the affordance of a holdable item, but lacks the trigger or barrel of a gun. The eyes and horns signify its connection to the dragon, while the haptic pulse felt on picking it up and the sight of the dragon changing orientation provide feedback.

Ambidextrous acceleration

Managing and teaching button inputs is a persistent challenge in VR games, especially face buttons. Remembering which buttons or sticks on which hand are responsible for which action puts a high cognitive load on the player and will often require the option to rebind for left/right handedness or accessibility needs.

While rotating the dragon is elegantly handled by the controller's rotation, acceleration presented its own challenge. The grip button was already used to hold the Dragon Guide, the trigger was already used to trigger fire breath, and holding down the face buttons was unintuitive and unergonomic on some of the platforms we aimed to support. This left the stick to control the dragon's acceleration. Pushing a stick forward to accelerate and pulling back to decelerate is an intuitive enough action even to non-gamers, but there were still some aspects to consider.

At least one stick would be needed for virtually reorienting the player in space. I designed it so the purpose of the stick dynamically shifts. When not holding the Dragon Guide, tilting the sticks to the sides will reorient the player, but holding the Dragon Guide overrides this function in that hand and instead controls the dragon's acceleration. This maintains the connection between the hand and the item held within it, regardless of which hand the player chooses to use.
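The per-hand override can be sketched as a small routing function. This is an illustrative Python sketch under assumed names (`Hand`, `route_sticks`, the `throttle` and `player_turn` keys), not the game's actual input code.

```python
from dataclasses import dataclass

@dataclass
class Hand:
    holding_guide: bool   # is the Dragon Guide gripped in this hand?
    stick_x: float        # -1 (left) .. 1 (right)
    stick_y: float        # -1 (back) .. 1 (forward)

def route_sticks(left: Hand, right: Hand) -> dict:
    """Decide what each stick means based on what its hand is holding."""
    throttle = 0.0
    player_turn = 0.0
    for hand in (left, right):
        if hand.holding_guide:
            # The hand holding the guide drives the dragon's acceleration.
            throttle = hand.stick_y
        else:
            # A free hand keeps the default job of reorienting the player.
            player_turn += hand.stick_x
    player_turn = max(-1.0, min(1.0, player_turn))
    return {"throttle": throttle, "player_turn": player_turn}
```

Because the routing depends only on which hand holds the guide, swapping hands produces the same result without any rebinding.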

The dragon accelerates, turns, and brakes effortlessly and intuitively at the player's command

Modular, customizable, and extensible

When implementing the dragon, I split it up into several separate objects/components all intended to talk to one another. The dragon controller script accepts desired rotation and events to trigger acceleration but does not process any player input directly. This allowed the AI-controlled dragon to use the exact same code as the player-controlled dragon, simply using a script to emulate the same inputs that the Dragon Guide feeds to the player's dragon. The enemy dragon rider has different needs: it requires complex behavior trees to run the combat, but it has less of a need to feel smooth because the player is not in control of it. The player's dragon can use a much simpler finite state machine AI to handle responding to player input, enforcing boundaries, and avoiding obstacles when the player isn't in the saddle. These vastly different use cases are both supported cleanly by the same script due to proper compartmentalization and composition.
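The separation between the controller and its input sources might look roughly like the following Python sketch. The class and method names (`DragonController`, `GuideDriver`, `ScriptedDriver`, `set_desired_direction`, `set_throttle`) are hypothetical stand-ins, not the names used in the project.

```python
class DragonController:
    """Knows nothing about controllers or AI; it only receives commands."""
    def __init__(self):
        self.desired_direction = (0.0, 0.0, 1.0)
        self.throttle = 0.0

    def set_desired_direction(self, direction):
        self.desired_direction = tuple(direction)

    def set_throttle(self, amount):
        self.throttle = max(-1.0, min(1.0, amount))


class GuideDriver:
    """Player side: forwards the held Dragon Guide's pose and stick value."""
    def __init__(self, controller):
        self.controller = controller

    def update(self, guide_forward, stick_y):
        self.controller.set_desired_direction(guide_forward)
        self.controller.set_throttle(stick_y)


class ScriptedDriver:
    """AI side: emulates the same two inputs from a steering decision."""
    def __init__(self, controller):
        self.controller = controller

    def update(self, target_direction, wants_speed):
        self.controller.set_desired_direction(target_direction)
        self.controller.set_throttle(1.0 if wants_speed else 0.0)
```

Either driver can be attached to the same controller class, which is the property that lets a behavior tree or a simple state machine fly a dragon exactly as a player would.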

Features like rotation constraints, obstacle avoidance, and even the animation curves that determine the speed gained and lost from gravity can be tweaked by the designer to iterate on the dragon's handling and tailor it to the specific use case.

This screenshot shows some of the many parameters exposed to designers in the Dragon Movement controller to tweak how flying works and feels

From first concept

From the earliest version of this game dating back to my high school notebook in 2018, fights with other dragon riders have always been a core part of the experience I wanted the player to have. I have always felt an affinity for that trope in games, the boss fight that has a similar skill set to the player. The ability to fight other dragon riders with guns was a core hook used to market the game, both to attract talent to join the team and to explain the project succinctly in an elevator pitch.

A great emulation target for this was the Apex Predators from the Titanfall 2 campaign. While designing a full rogues' gallery of varied dragon riders, all with their own identities and skill sets, would have been amazing, it was unfortunately beyond the scope of the project.

Instead we chose to focus on one single encounter, a vertical slice of what players could expect if the game was a fully featured commercial product and not merely a student project.

An original scan from my high school journal depicting a first-person dogfight with another dragon rider

Managing state and behavior

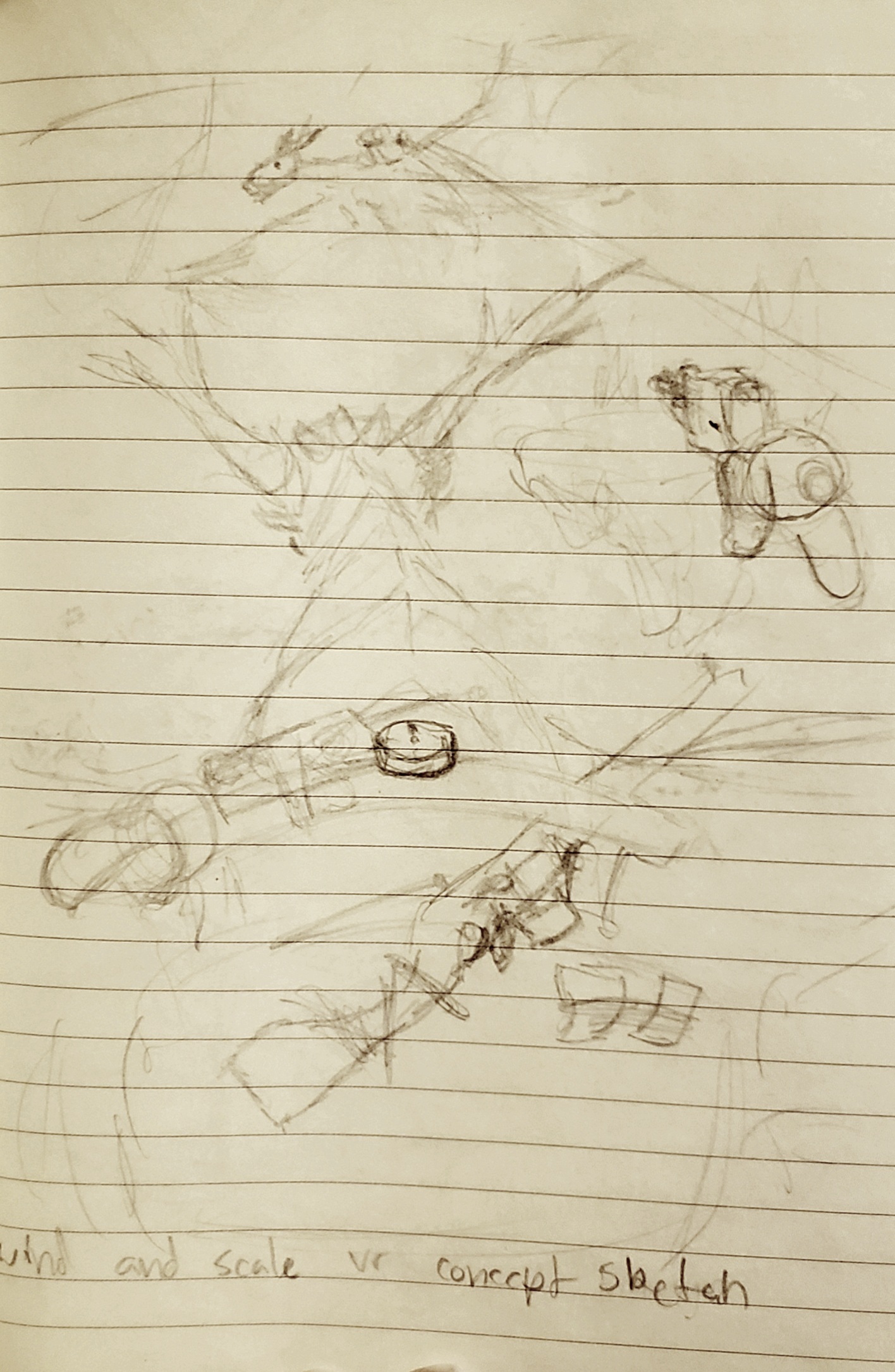

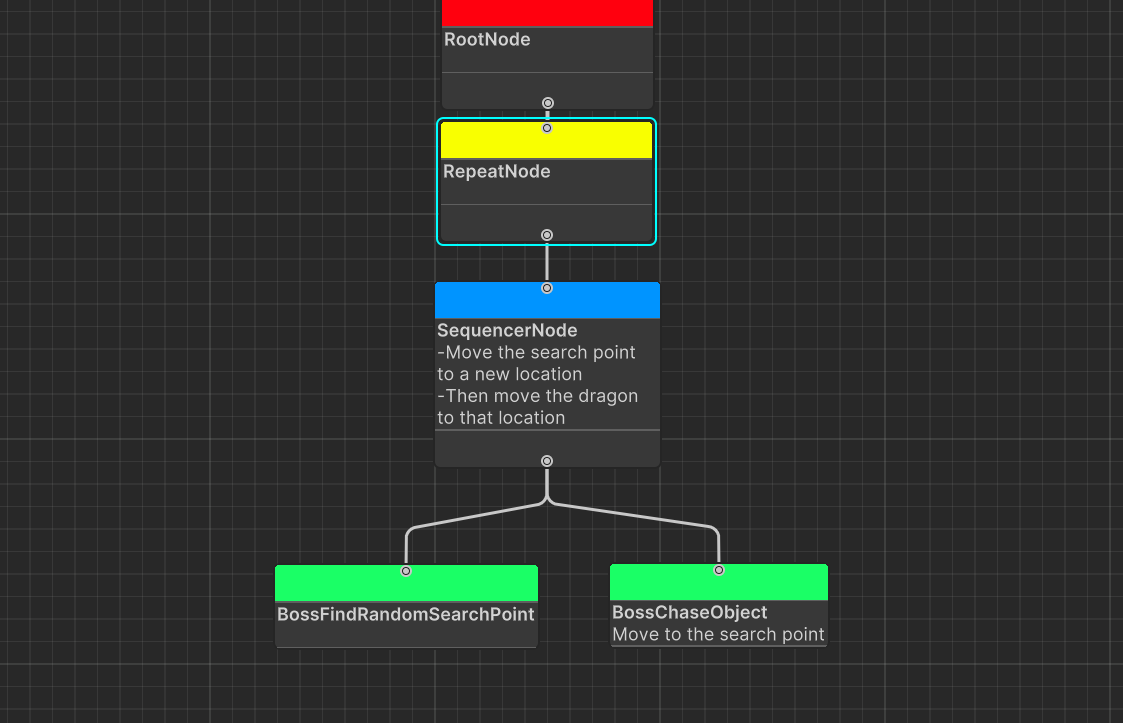

Behavior trees are one of the best approaches for handling complex enemy behavior in games. Our team invested in creating our own behavior tree implementation for Breath of the Sky. Behavior trees are more flexible than finite state machines and easier to iterate with; however, they are not as good at managing state. I simply was not able to create a single behavior tree robust enough to handle the varied behavior needed for the boss fight while also being responsive enough to the player's actions.

The elegant and simple solution that I came up with was inspired by what I learned in Professor Steve Rabin's AI Programming class. The boss actually has multiple separate behavior trees that share one blackboard, and a finite state machine toggles between them. The trees themselves handle the moment-to-moment decisions on how to search for, attack, and flee from the player, while a top-level script manages which tree is being applied.
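As a rough illustration of this structure, here is a Python sketch in which a top-level state machine picks one of several trees, all ticking against a single shared blackboard. The state names, blackboard keys, and transition rules are assumptions made for the example, and `BehaviorTree` is a stand-in rather than our actual implementation.

```python
class BehaviorTree:
    """Stand-in for a real tree: `root` is any callable taking the blackboard."""
    def __init__(self, root):
        self.root = root

    def tick(self, blackboard):
        return self.root(blackboard)


class BossBrain:
    def __init__(self, trees, initial_state):
        self.blackboard = {}          # one blackboard shared by every tree
        self.trees = trees            # state name -> BehaviorTree
        self.state = initial_state

    def choose_state(self):
        # Hypothetical transition rules, checked in priority order.
        bb = self.blackboard
        if bb.get("out_of_bounds"):
            return "return_to_arena"
        if not bb.get("player_visible"):
            return "search"
        if bb.get("recently_hit"):
            return "flee"
        return "attack"

    def update(self):
        # The state machine decides which tree runs; the tree decides what to do.
        self.state = self.choose_state()
        return self.trees[self.state].tick(self.blackboard)
```

Keeping the transitions in one small function means each tree only has to be good at its own job, which is what keeps the individual trees simple.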

Actions like returning the boss to the play area, responding immediately to damage, or losing the player when they go out of vision needed the ability to interrupt the current tree and put the boss into a new state. To prevent further issues, I extended our behavior tree implementation to add a recursive "clean up" function that would be called when a state transition happened and a node had to be aborted in progress.
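A recursive clean-up pass of this kind could be sketched as follows. This is a simplified Python illustration, not our implementation: the `Node` class, its `running` flag, the `on_abort` hook, and the `log` list are all hypothetical.

```python
class Node:
    def __init__(self, name, children=()):
        self.name = name
        self.children = list(children)
        self.running = False   # True while the node is mid-execution

    def on_abort(self):
        """Hook for node-specific teardown (stop an animation, release a lock)."""

    def clean_up(self, log):
        # Visit children first so the deepest running node is torn down first.
        for child in self.children:
            child.clean_up(log)
        if self.running:
            self.on_abort()
            self.running = False
            log.append(self.name)
```

When the state machine forces a transition, calling `clean_up` on the outgoing tree's root leaves no node believing it is still in progress the next time that tree is selected.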

A side-by-side comparison of two of the behavior trees the boss uses; one is very simple, the other is highly complex

Finding the fun:

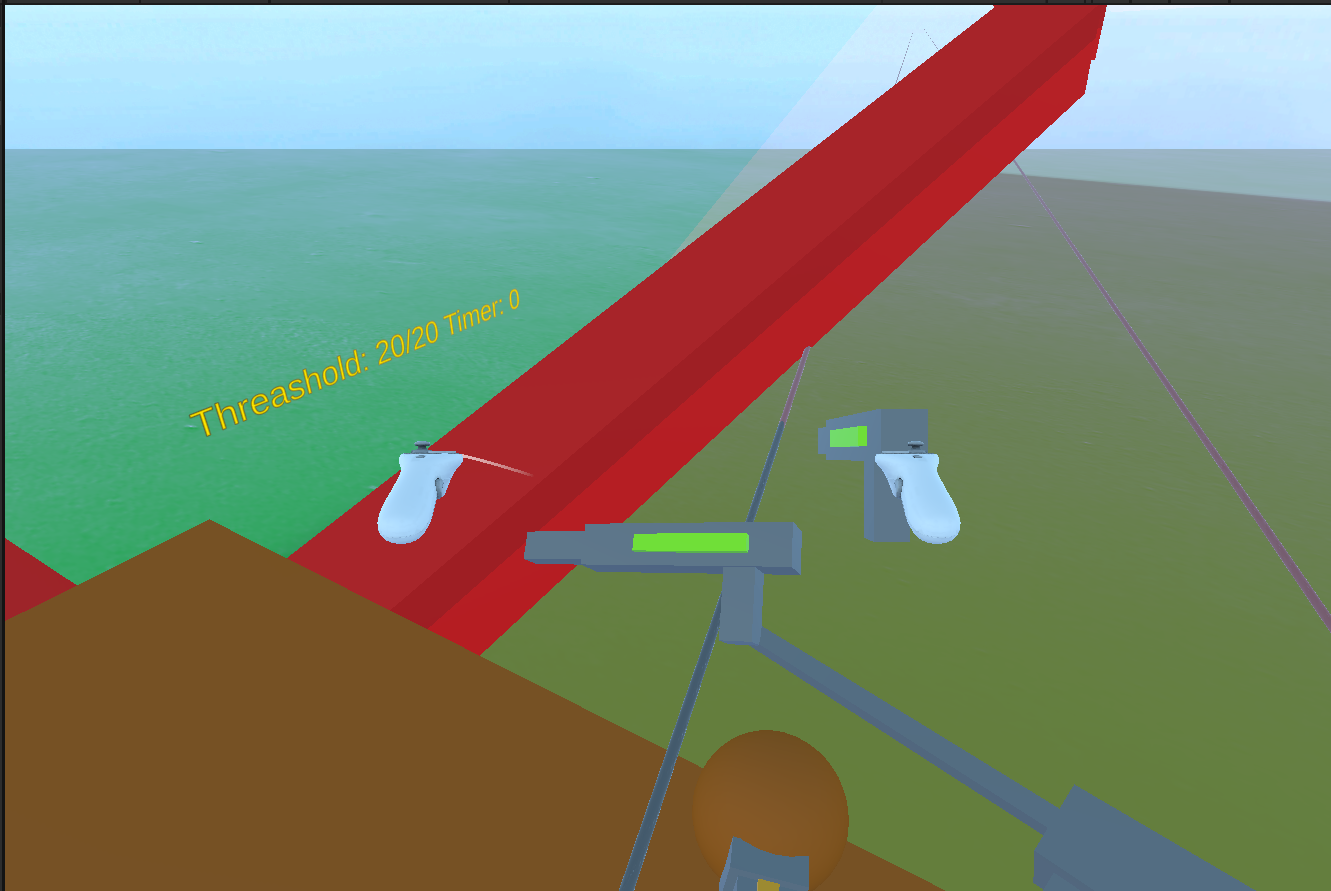

When designing this game, we set out to make a game that could present a challenge for skilled players but that would still be fun for new players, especially to players unfamiliar with VR games. This required constant and thorough playtesting to double check every design decision. In our testing of the boss fight, we encountered a difficult design challenge to solve. Players with a firm grasp of the mechanics could beat the boss in seconds, but increasing the boss's health could lead to unskilled players taking upwards of five minutes to clear the boss, and those five minutes would be full of frustration and a lack of feedback.

Looking at the notes, videos, and data collected from playtest sessions I analyzed what behaviors differentiated a strong player from a weak player. Strong players often could locate the boss, whereas weak players were too busy managing their controls and their own flying to keep track of the boss's location. When losing track of the boss, weak players would also take damage from behind, get knocked off their dragon, and get stuck in a long loop where they could not return damage to the boss.

Addressing the issue of the players losing track of the boss required new tools for the player and new behaviors from the boss. I added a compass to the saddle that would point persistently to the boss's location; this object could later be repurposed in other levels to direct the player through the game. I also added behavior to the boss to determine if it was out of the player's view and then get in front of them before attacking. That way the player would always see where their damage was coming from. Lastly, I added a silent decay factor to the boss's health. The boss starts with a massive health pool, but upon taking damage that number slowly ticks down to a much lower value. An aggressive player with good aim and good situational awareness cannot kill the boss in one good strafing run, but a player who can only score occasional hits while struggling to fly their dragon isn't forced to chip away at an insurmountable wall of health points. None of the playtesters were ever informed about this system, and by the final build both strong and weak players alike reported that the boss fight provided a satisfying challenge and did not last too long.
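One way to read the silent decay is sketched below in Python. The numbers are placeholders, and the interpretation that decay begins at the first hit and stops at a floor (so it never kills the boss on its own) is an assumption, as are the `DecayingHealth` class and its field names.

```python
class DecayingHealth:
    def __init__(self, start=1000.0, floor=200.0, decay_per_second=20.0):
        self.health = start
        self.floor = floor                  # decay never pushes health below this
        self.decay_per_second = decay_per_second
        self.damaged = False                # decay only begins after the first hit

    def take_damage(self, amount):
        self.health = max(0.0, self.health - amount)
        self.damaged = True

    def update(self, dt):
        # Quietly drain the pool toward the floor once the fight has started.
        if self.damaged and self.health > self.floor:
            self.health = max(self.floor, self.health - self.decay_per_second * dt)

    @property
    def dead(self):
        return self.health <= 0.0
```

With values like these, a burst of early damage still leaves most of the pool intact, while a long fight with few hits converges on the much smaller floor value.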

A highly-skilled playtester giving some last minute feedback on a build near the end of development

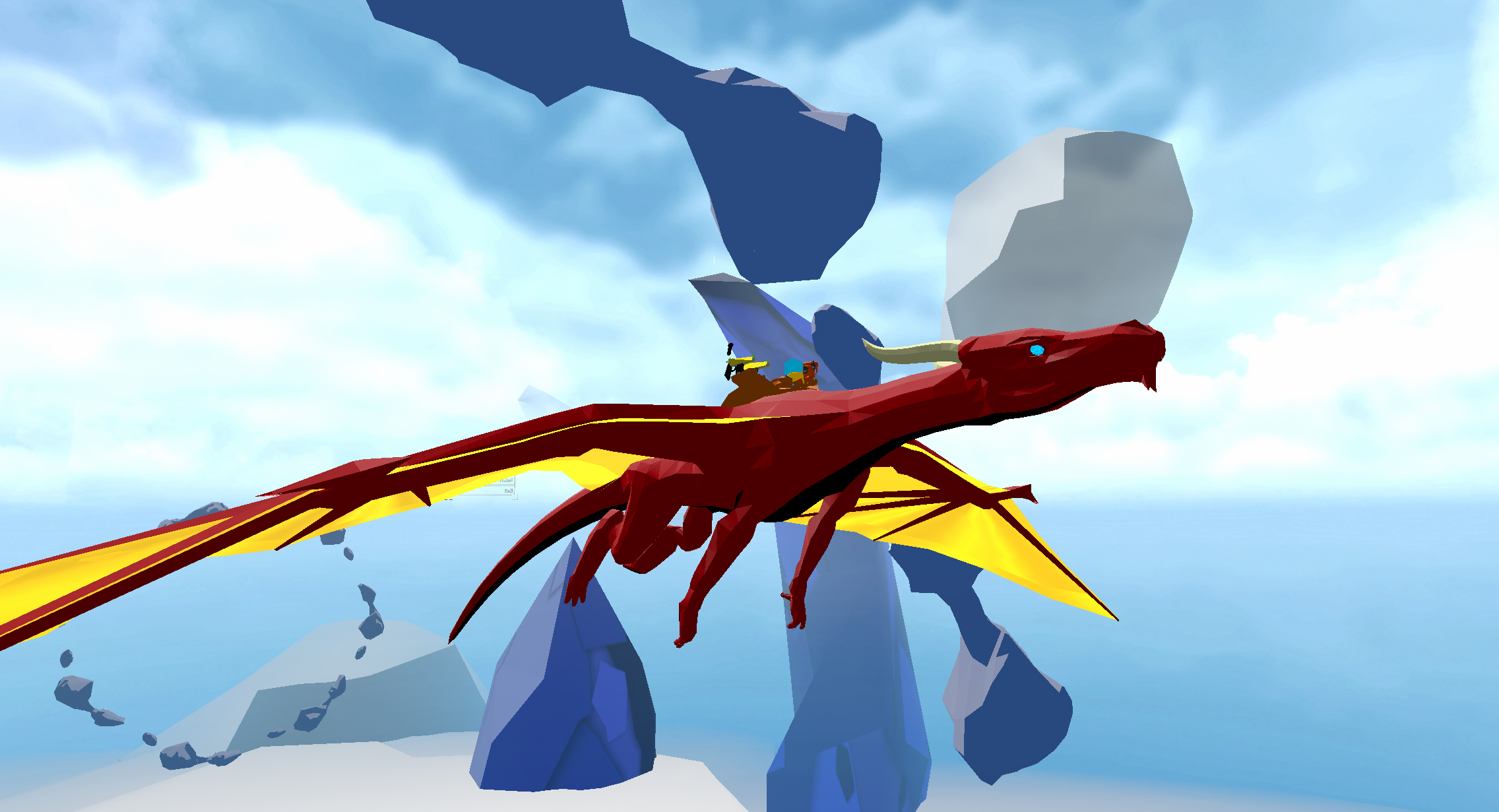

Breath of the Sky is a VR Action game setting out to be the definitive dragon rider experience. In it you have complete control over your dragon, as well as an arsenal of weapons and grappling hooks to battle enemies in intense aerial combat. With a team of five programmers (including myself), we had roughly five months to produce the best version of this concept that we could.

Team Dragonfall:

When designing the game, our core pillars were:

FLOW

Gameplay mechanics should be kept simple but highly rewarding to keep the player in a flow state. Controls should be simple and intuitive so the player can be fully immersed in the fantasy. The player should not be reliant on reading text or UI elements to understand the game state.

SPEED

Everything happens at a rapid pace. The player moves quickly, as do enemies. The player should not be forced to wait around.

FANTASY

The player does not watch spectacular things, but causes them. Every action is a thrill ride, and every design decision should give the player a way to feel powerful and cool. The primary goal is not to challenge the player, but to act as wish fulfillment.

The final version of the game we were able to create in the spring of 2025 serves as a vertical slice of three modes of play that we thought best represented our idea for this game:

Origin Story:

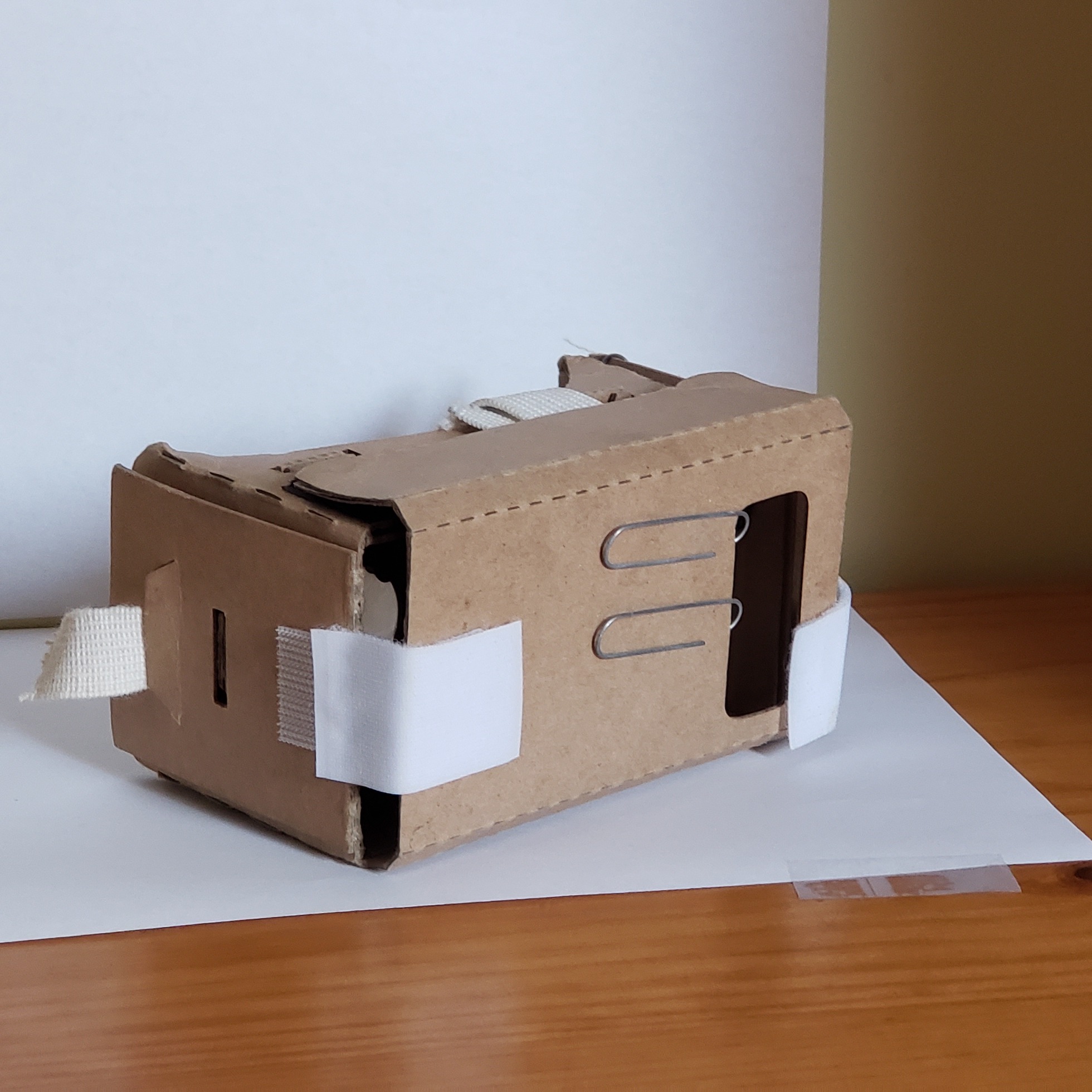

The first seeds of what would become Breath of the Sky were planted back in 2015. Oculus Rift dev kits had started to make it into the hands of my favorite YouTubers and the world of virtual reality was opened up to me. I did not have a powerful computer at the time, nor did I have a lot of money as a high school freshman. In researching how I could get my hands on any VR experience on a budget, I found the Google Cardboard. It could be bought for under twenty dollars, and accepted my Samsung Galaxy S5. A lot of the things that I wanted to play on the device, like Minecraft, it simply wasn’t capable of. This led to me trying out the small sampling of VR titles available on the Google Play store. While a lot of them were cool and were fun to show off at school, none of them really had staying power. Throughout high school I would daydream about potential games that I could bring to the platform, working around the limitations. If no one else was going to make a good polished game for the platform, then I would have to step up to the plate and do it myself!

Early dev logs I made at the time.

In the summer of 2017 I started to finally coalesce my ideas into something more substantial. The initial idea was for a VR game about riding on a dragon. The player would use a Bluetooth controller for input, since the Google Cardboard only had one button located on the headset that could not be pressed rapidly. The core gameplay would be centered around aiming the dragon’s movement with your head before pressing an “aim” button to unhook your head from the dragon’s controls to shoot guns in the direction your head was looking. I started writing up a game design document and spent senior year learning all I could about the Unity game engine and the process of exporting APKs to Android. As part of my pre-production, I looked around the internet to see if anything like what I was envisioning had been created before and stumbled upon a series of dev logs for a game called “Dragon Skies VR.” I was almost discouraged from trying altogether; here was someone making something so similar to what I was intending. Ultimately I decided to stick with it, given that I intended to take my game in its own direction.

The devlog for "Dragon Skies VR"

I'm sad to see that this game seems to have ceased development despite an incredibly promising initial showcase.

By the time I graduated high school in 2018, a lot had changed. Google had abandoned the Cardboard platform. Newer phones were coming out that did not support the standard, and with PC VR maturing there was far less appetite for smartphone-based VR experiences from the market. The HTC Vive had been out for two years and opened up the possibility of room-scale experiences, prompting other companies to jump in with their own offerings to the VR Market.

I started seeing videos on YouTube explaining new, low cost headsets made by Microsoft that were competitive in features with the Vive, as well as a competent gaming laptop for less than a thousand dollars. I got both as a graduation present and decided to retarget the game from the dying Cardboard platform to PC VR.

The transition was surprisingly smooth. Rather than relying on head input, I quickly found that using hand orientation of a tracked controller worked much better. The player could now fly with one hand and aim freely with the other.

I took a gap year between high school and college to try my hand at solo development. It did not go well. Motivating myself to work on the project was difficult, and while I had friends from high school who had written in my yearbook that they would like to work on a game with me, they suffered from the same aimlessness and lack of focus as I did, meaning their contributions were negligible. I was on my own, and in way over my head. Everything I’d learned about Unity and programming came from googling tutorials on YouTube, and I lacked the real maturity and problem-solving skills to overcome small setbacks.

Eventually I went to my local state college to start getting a computer science degree, and I put the VR dragon game on hold.

My original Google Cardboard, after extensive modification and years of use

I would later get a Dell Visor, though the WMR platform was equally short-lived and I would need to get a Meta Quest in 2024 to continue VR development

After some big shakeups in my life, two years into the degree I decided to chase my dreams of game development and attend DigiPen Institute of Technology. Making VR games was not an option for students until senior year, so I sat on my idea for three years before using the prototype I worked on all those years ago to pitch the game to potential teammates.

A fellow student, Andrew Roulst, had worked on a previous project with me where we had decided we had similar approaches to game design and would like to work on a game together in senior year. When I pitched him my idea he showed me a prototype of his own, one that was remarkably similar in concept to what I had worked on. His was a third-person game about a stoat that could use grappling hooks to attach itself to the back of giant birds; once attached, the birds controlled almost identically to how the dragon in my prototype worked. We agreed to team up, but as a fellow creative lead he wanted to bring over some of his own ideas for this game.

Andrew is a big fan of the VR game Swarm and wanted to have grappling hooks in the game. His stoat tech demo had a mechanic of moving around floating islands and grappling on to birds before they could be ridden, so we added that mechanic to our core game concept. Now the rider could get off the dragon and use grappling hooks as an alternate movement option. Our freshman-year game project course professor, Justin Chambers, had a quote about managing project scope and expectations:

“You’re not going to make Breath-of-the-Skyrim”

Seeing as this was an ambitious game, one that would allow us to demonstrate all the skills we’d honed over our four years at the school, we chose the title “Breath of the Sky” as a tongue-in-cheek reference to that motto. Our mechanics were not significantly similar to those of Skyrim or Breath of the Wild, but it communicated how this was our Icarus moment to do something risky and ambitious and fly close to the sun with our game design.

This substantial increase in design scope was met with a substantial increase in programmer talent. We were able to attract three other teammates to the project, each with strong programming backgrounds that would allow us to take on this much more ambitious vision.

Screenshots from Andrew's project "Skyborn"

Breath of the Sky:

Early on we did some things that were admirable, and others that would create long-lasting problems and technical debt that would come back to haunt us later in the project. Each team member got their own pet feature to work on. Rex worked on turrets, Nuni worked on guns and the item system, Joon worked on Enemy AI and behavior tree tools, Andrew worked on the grappling hooks, and I worked on the dragon flight. Both Andrew and I were able to get something playable in the first week and could immediately start iterating and improving on our systems, but we left the rest of the team hung out to dry with a less firm set of directions. We had assumed that by simply presenting our two prototypes we had effectively done pre-production, but that couldn’t have been further from the truth. While I did throw together a game design document, I was so eager to work on iterating on my feature that I did not put in the proper work to make it a useful and living document. Ideas would be thrown around at water cooler meetings and testing would show some things working and others not. Pretty soon the direction of the game diverged drastically from what was specified in the design document, and it then ceased to be a useful tool for informing the team what to work on next.

Andrew and I split the roles of producer and design lead, but that led to a lot of arguments. One sticking point was the actual structure of the game. I wanted something more linear and narrative, a vertical slice of a game that I would legitimately want to work on and play rather than the complete game itself. Andrew wanted to raise the bar on what a student project could be, and wanted to have a lot of replayability to the game even if the content was light. He had ideas for roguelike elements, as well as open-world level loading.

This led to conflicting user stories about how the game was meant to be played. I was intent on making a slightly updated sequel to my dream game, a game about riding a dragon where you could sometimes use grappling hooks. Andrew was much more passionate about the grappling hooks, and thought that the dragon would just be a way of shuttling the player between grappling hook-centered environments. Since we couldn’t resolve this difference in vision through discussion, we decided to kick the can down the road: prototype both features and then use playtest data to figure out where the game should go. While this was a good way of managing an interpersonal conflict, in retrospect I think it was deeply detrimental to the game’s development. We were not able to achieve as much because our ability to plan was limited. We couldn’t make long-term goals if we were waiting on short-term prototypes to dictate where the game would go.

Despite this, the game started to come together rapidly. Within the first two months we had something that flew like a dragon (even if it was just made of Unity shapes), and we had some grappling hooks. By a miracle, the two actually complemented each other pretty well, but there was still tension.

The school only provided us one Valve Index headset to use, and since all development was done on gaming laptops, the process of actually getting it running was a lengthy and difficult one. None of our laptops had a full-size DisplayPort, meaning that we needed to order adaptors, and not all adaptors are created equal. In addition, our team space was not set up near any walls to mount lighthouses to, meaning we had to set them up in less-than-ideal places around the room that often needed to be cleaned up after use. This added a substantial amount of friction to the development process. I had my own HMD to bring from home, but only my computer was set up to use it, and the other one was so time-consuming to set up that it was rarely used for more than proctoring playtests.

This required Andrew to devote a significant amount of time to developing a competent system for simulating the VR game on a flat screen. It was useful, and sped up development in some ways, but also obscured certain issues with the minor discrepancies between simulated VR and real VR.

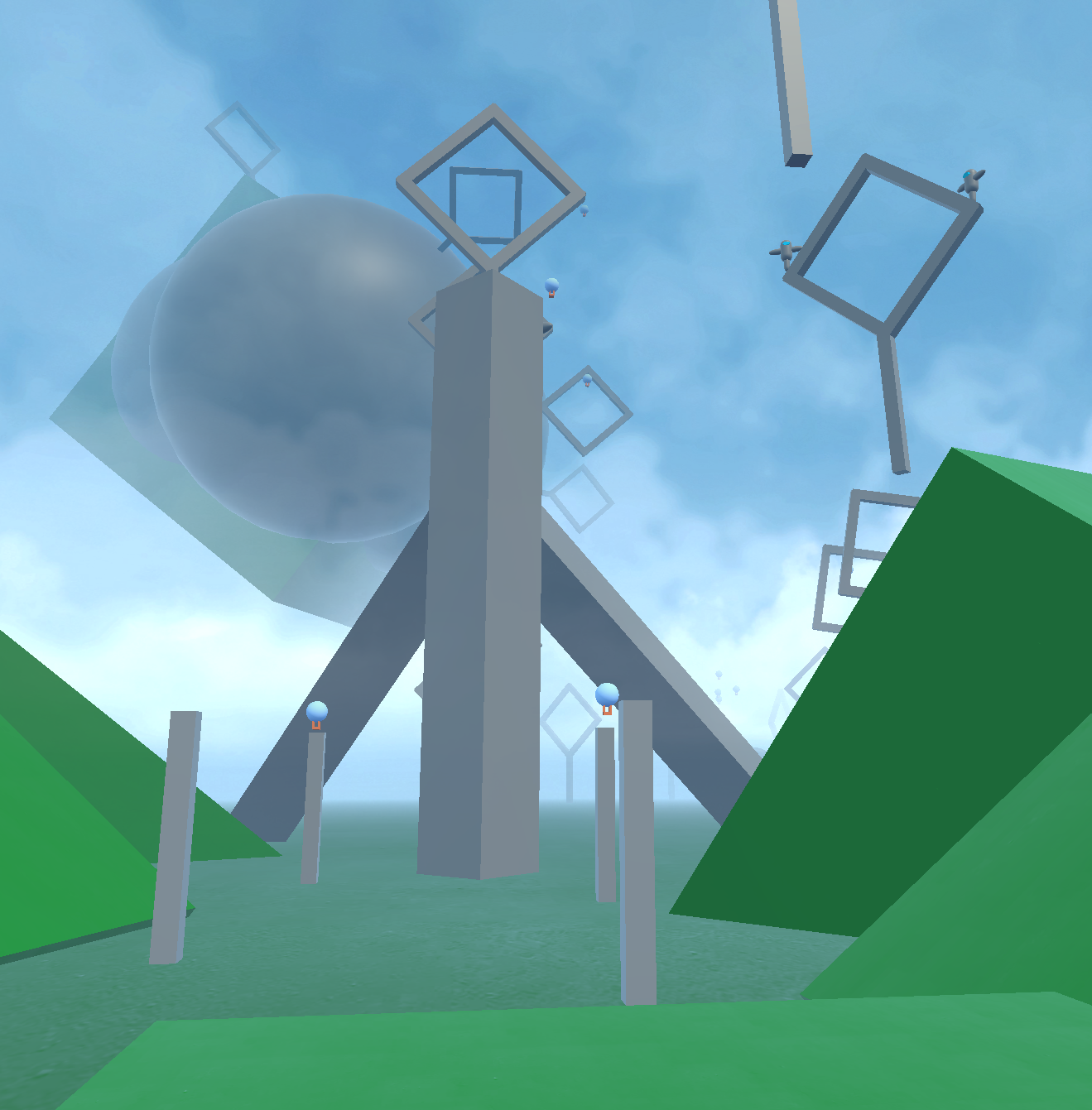

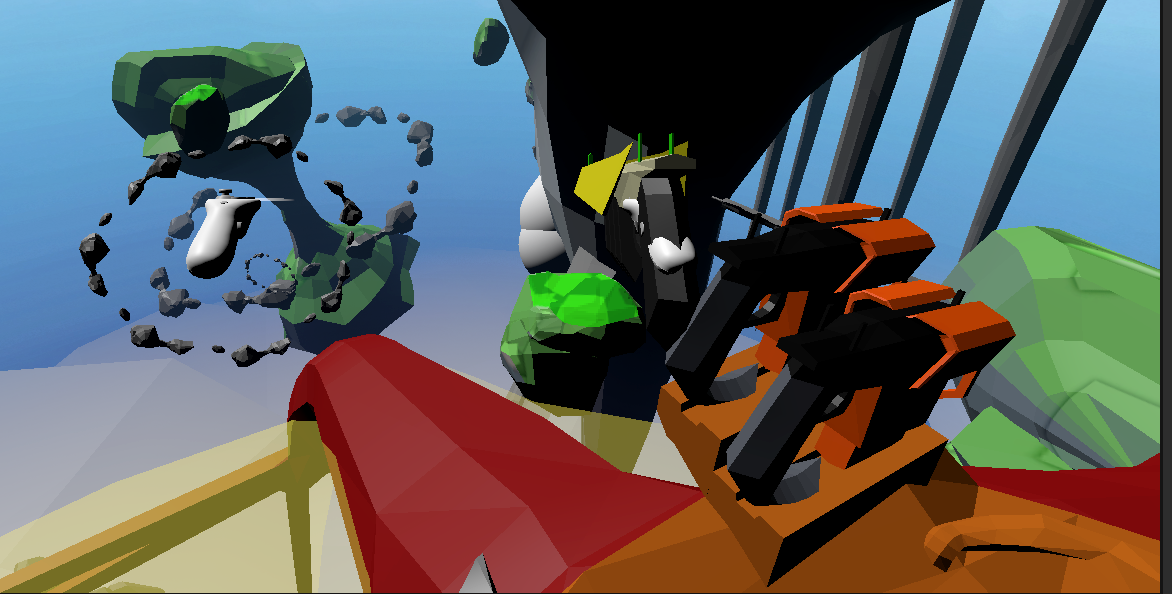

Early footage from the first playable prototype, lovingly described by the team as "The Tofu Build" because the default Unity cubes looked like floating blocks of tofu

There were also conflicting user stories on a more fundamental interface level, something that we should have planned out from the start. Using Swarm as an emulation target, Andrew was passionate about the player being able to dual wield guns while grappling. Swarm is a game where the players' hands are guns, and they cannot be unequipped in the middle of a session; it’s far more “arcade-y” in that sense. I was passionate about the player switching weapons on the saddle by picking them up, treating the weapons more as physical items that could be interacted with tangibly. This led to clashes around the grip input, as “pick up” and “extend grappling hook” were sharing the same input, and our code had to tie itself in knots to accommodate this contradiction. If I could travel back in time and do it over again, I would make sure this is something the whole team was in agreement about from the start. Our approach of “just build both and then playtest to see what’s better” failed horribly when presented with this conundrum. Nuni, who was in charge of working on the item/weapon system, was constantly getting conflicting notes from Andrew and me about what we needed and how the game should function (and the design document was out of date, so he couldn’t just rely on that). This sent him on fool’s errands that slowed down his progress, and that cascaded through the rest of the project.

While the early stages of the game were chaotic, I still remember them fondly. As messy and convoluted as things got, there was still a shining light: playtesters loved it. Even when the game was a skeletal collection of half-baked scripts and Unity primitives, players were enjoying themselves. Flying on the dragon was fun! Swinging from the grappling hook was fun! There were flashes of inspiration, like when a playtester pulled the eject switch on the dragon, then mimed shooting at enemies behind him (that weren’t implemented yet), then snapped back to use his grappling hook to bring him back to the saddle. We were seeing the beginnings of a gameplay loop forming, one that got both us and playtesters excited. Even as there was mounting technical debt and design disagreements, we kept those enthusiastic reactions from playtesters as our north star of where we wanted the game to end up.

Around November, DigiPen held its once per semester “LAN Party” event, where student game teams open up their work space to have the student body play the games to offer feedback and data. Since a full version of the game was not playable yet, I constructed three zones to get feedback on different parts of the game. The players would be dropped into one of our general test environments to familiarize themselves with the controls and for me to handle calibration; this would provide us with general feedback on the interface and players’ comfort in VR. Next, they would be sent to a “dragon playground” where they would be tasked to fly the dragon through rings Star Fox-style while shooting at static targets and competing against other players’ time trials to win a prize for the event. Lastly, they’d be sent to a “grapple playground” where there was no dragon and they would instead race to find a blue cube at the far end of the environment. From this event we learned a lot of valuable things. Players were struggling to hit targets while moving on the dragon due to a critical bug in our projectile code, and some players struggled to go to exact places when using the grappling hooks. What we didn’t learn was which mode of play was more desired. Some players gravitated towards the dragon, some gravitated towards the hook, and we couldn’t find a clear answer on which should be the primary mode of play.

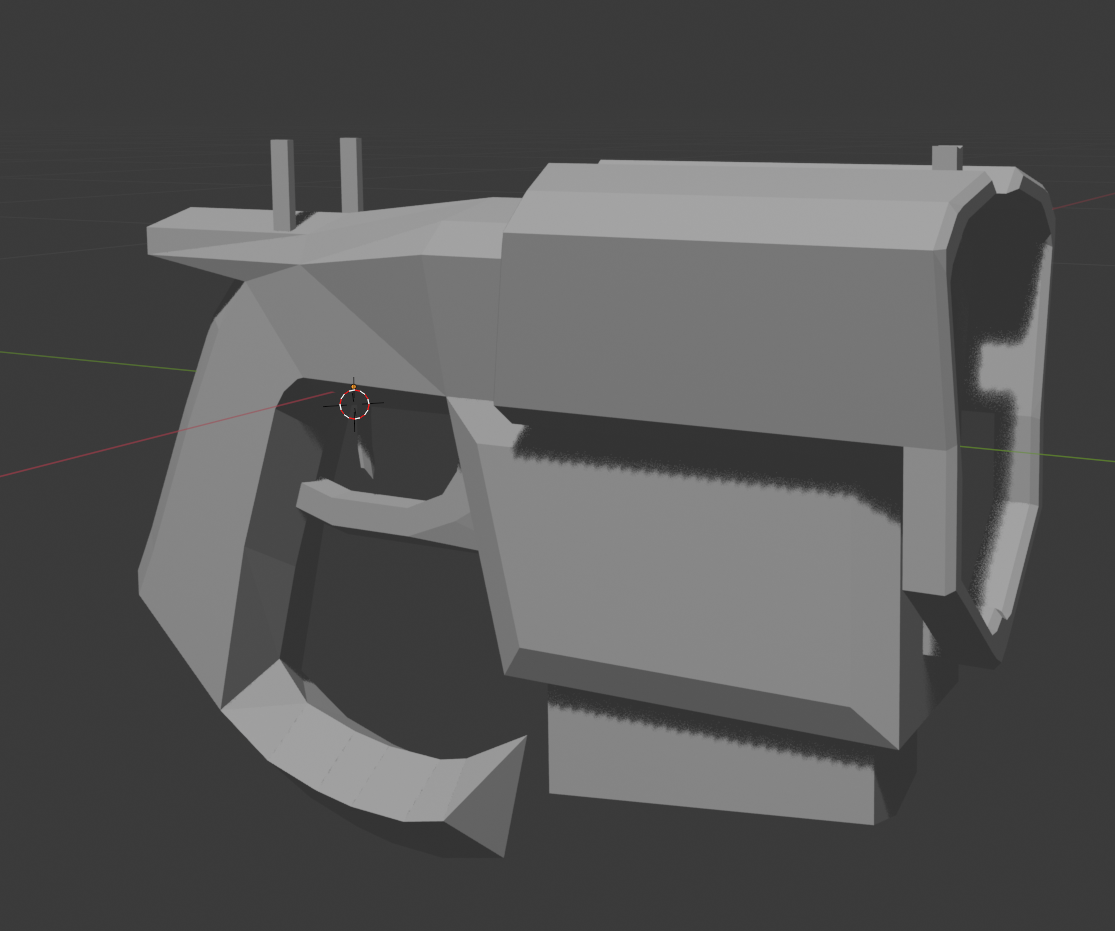

The game was still finding its identity, but playtest feedback helped us narrow our focus. Early on, we experimented with a lot of half-finished features that the team was passionate about. Nuni really wanted to try making two-handed rifles work and added a sniper rifle to test out. Andrew made multiple prototypes including a tool that would allow the player to make their own grapple points, and a floating serpent combatant. Together with Rex, I was passionate about making flying fortress airships for the player to fight against. Each of these fell apart in their own unique ways. The experimental weapons were confusing and not well-received by playtesters, and the experimental combatants would require art assets that we didn’t have the bandwidth to create. In playtests we asked, “What element of the dragon rider fantasy do you think this game was missing?” and used that feedback to refine our focus on which features needed to make it into the game.

The "dragon playground" didn't end up being representative of the final game's art style or level design, but it still gave us a good place to test out players' ability to navigate around obstacles and aim at targets while moving

Towards the end of the year, more systems started to come online and the game approached a state we could describe as “playable.” Enemy AI was up and running, the projectile code was fixed up, and both the grappling and dragon flight mechanics were approaching a finished state, allowing Andrew and me to move on to other features. I began working on the dragon rider boss fight… and that’s when it happened. Disaster struck in the final three weeks of the semester.

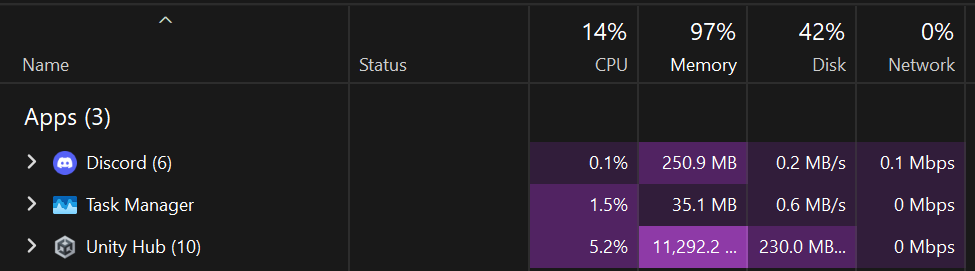

My laptop started blue screening when I tried to play the game. It was consistent, fatal, and we could not find the cause. RAM usage would climb, even in the editor when the game wasn’t running, until about ten minutes after starting the project file, the computer would run out of physical memory, start paging, and then page fault as my worn-out six-year-old SSD couldn’t take it anymore. We had a memory leak, a critical one, and one that seemed to only affect some people. It happened consistently on my machine, but only once in a blue moon to anyone else. This was critical: it had to be fixed not only so that we could develop the game but also because we just couldn’t ship a game with a memory leak in it.

For weeks, I could not find a cause. At first I thought it was my computer, but even when I got a new one up and running, somehow the bug followed me. Was I cursed?

We ended up giving our final presentation of the semester on another teammate's machine. I was deeply embarrassed; there were so many things that I wanted to get in before we left for break, so many things I knew I was capable of doing if this bug hadn't shown up.

Coming back from winter break with a fresh 32 gigabyte stick of RAM stuck in the machine, I was ready to get back to it. This bug had stolen weeks of development time and I was going to nail down where it came from. I jumped forward and back in the development repo, opened up support threads on the Unity forums, and filled my Google search history with every permutation of “Why the hell is Unity eating up all my RAM?” It didn’t help that the debugger didn’t find anything, and running Visual Studio ate up more RAM, which caused it to crash faster.

Making a fresh project with nothing installed didn’t have the crash or the RAM usage, so it wasn’t an engine-level bug. It was something we did… and when I found the cause, I was equal parts relieved and mortified about where it came from.

To make the graphics for the grappling hook rope, Andrew made an object pool of cylindrical segments; that way there could be an intricate texture on the rope without having to do any UV math or deal with stretching. It wasn’t a particularly efficient solution, but the game could handle it and since only the player used grappling hooks it was fine to be a little inefficient in exchange for a solution that could be perfected in an afternoon. Playtesters repeatedly requested a bow and arrow, and so when we made the prototype for it we used the same tech that the grappling hook used for the bowstring.

The bug in action

The bowstring used its own physics approximation tied to framerate, rather than Unity’s built-in physics. This worked perfectly fine, but it meant when the delta time was too large or too small there could be problems with floating point imprecision and NaN propagation. Whenever my computer would lock up, the last thing I would see would be the bowstring shooting off into infinity from the dragon’s saddle… but I assumed this was a symptom of the problem, and not the cause. What was actually happening was that at level start, too many scripts would be firing their Awake() function, each one making a GameObject.Find() call that was costly and slow. This would cause a large lag spike that most players didn’t notice because they were still putting on the headset. The bowstring always started at (0, 0, 0) because of a programming oversight, but it would snap back into place within that lag spike and we never noticed it because the bow was out of frame. Combining that oversight with the lag spike causing an infinity to show up in the bow’s velocity caused the string to shoot off towards the horizon at level start. The string would then request from the object pool that infinite cylinder segments be spawned to create the visual for this infinite bowstring. The operating system dutifully obeyed this request and allocated one hundred gigabytes of virtual memory, started paging, causing more lag, causing more bad math, causing more segments to be requested, and poof. Consistent blue-screen.

The bug didn’t happen on anyone else’s machines because their higher spec processors meant they never experienced the lag spike and therefore their bowstring bug only ever requested a reasonable number of segments from the object pool. The solution was simple: tweak the bowstring’s physics so it was no longer starting at the world origin, and cap the size of the object pool. Two lines of code and the bug that had stolen weeks of the team’s time was gone. The memory leak wasn’t in the Unity engine; it was something our own oversight had created.
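The pool cap can be illustrated with a short Python sketch. The `SegmentPool` class, the `segments_needed` helper, and the specific limits are hypothetical, and the extra check for non-finite rope lengths is an added assumption rather than part of the fix described above.

```python
import math

class SegmentPool:
    def __init__(self, max_segments=256):
        self.max_segments = max_segments   # hard cap on live rope segments
        self.active = 0

    def request(self, count):
        """Grant at most `max_segments`, however many the caller asks for."""
        self.active = max(0, min(count, self.max_segments))
        return self.active


def segments_needed(rope_length, segment_length=0.05):
    # Defensive guard (an assumption): treat NaN, infinite, or negative
    # lengths as "draw nothing" instead of passing them on to the pool.
    if not math.isfinite(rope_length) or rope_length < 0.0:
        return 0
    return math.ceil(rope_length / segment_length)
```

With the cap in place, even a runaway length can only ever cost a fixed number of segments, so one bad frame of math degrades the visual instead of exhausting memory.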

With that out of the way we could finally get back to actually making the game. With the advice from our game professors, we tried to cut back on features that had crept in earlier. Initially we had wanted a more in-depth item system where the player would search around the environment for weapon upgrades, encouraging exploration similar to the Korok seeds in Breath of the Wild. This system had to be cut, even when substantial work had been put into it. We had all the tech, but with no dedicated designers we didn’t have the bandwidth to implement it in a satisfying way. Andrew and I, who both were pursuing Computer Science and Game Design degrees (as opposed to the other three who were all in the Real Time Interactive Simulation track) had to split our design duties with our tech duties. Despite my best efforts to hire more people, we only had two halves of a designer, and that designer’s plate was full.

I worked and iterated on the boss fight while Andrew helped support the enemy and weapon teams. Despite having no formal design training, Nuni really stepped up when it came to designing and implementing the weapon system, giving tons of exposed values to tweak in the editor to dial in every aspect of the game’s gunplay. Joon implemented three interesting enemy types, as well as locating good asset store models for them and tweaking the meshes to match our art style. Rex helped me through the worst of the bug fixing and used his physics and linear algebra skills to help patch the holes in my boss fight code. He also implemented the turret target leading math for the boss.

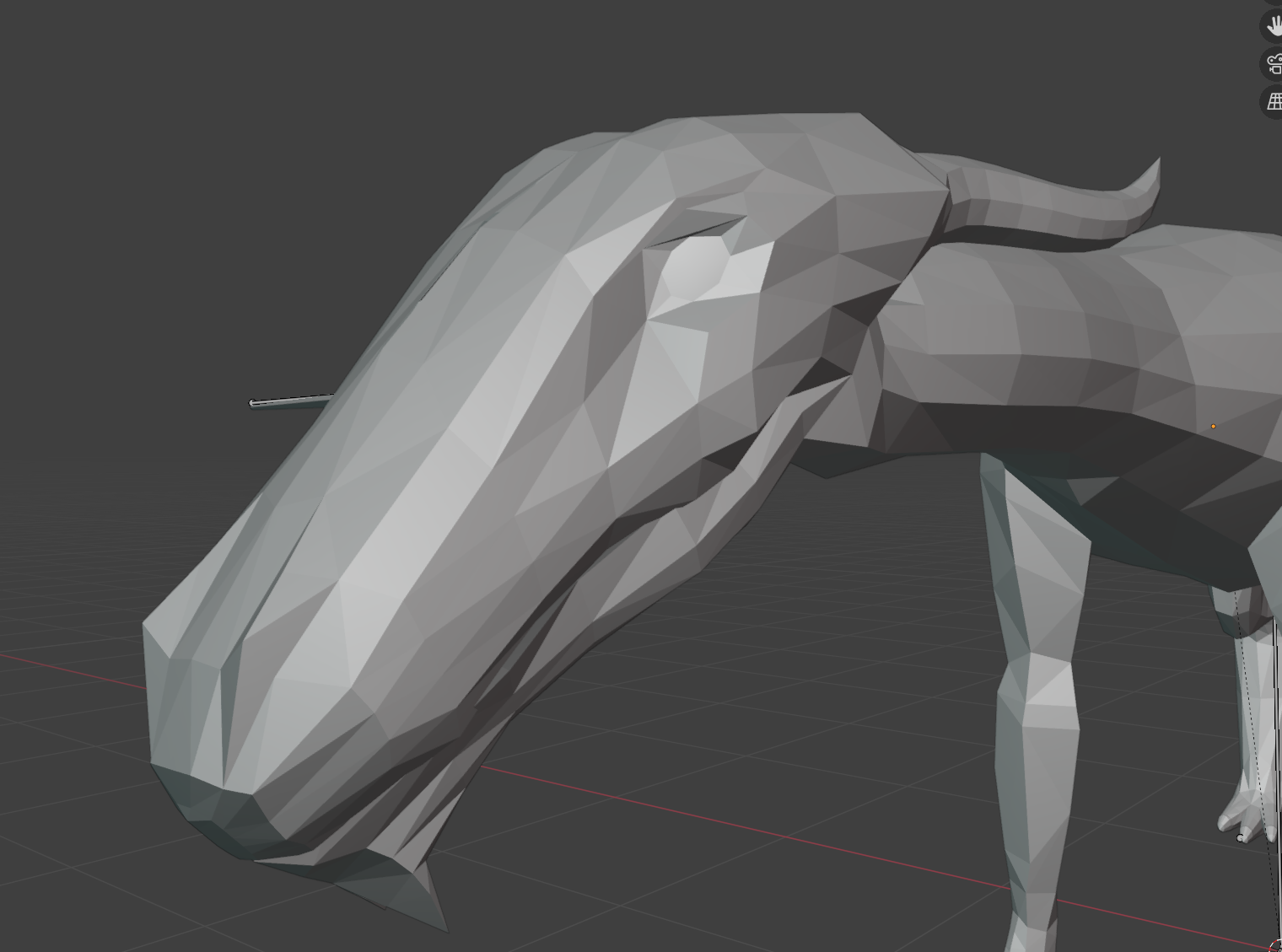

The second semester was when the game really started to take shape. We narrowed the scope, started to do some visuals and level design, and actually ended up with some fully playable action blocks. Tickets were drying up on the Trello list, playtest feedback was rolling in, and I was able to find some free time to teach myself Blender and do a pass on the graphics.

Weight painting woes

Things weren’t perfect, though. The ugly head of our technical debt started to show itself. Lighting would break unless we loaded scenes in a specific order. The way we introduced additive loading caused race conditions leading to null reference exceptions when objects weren’t able to find the player on scene start. We implemented floating origin to combat a specific issue with our fog shader, which required a rewrite of dozens of other systems that were expecting static coordinates.

As I started taking the boss fight to playtests, cracks started to show. Code I had written for obstacle avoidance wasn’t working, causing the dragon to fly straight into obstacles and get stuck. Disabling the boss’s collision with the environment was a band-aid solution, and still had the problem of players losing track of their enemy when he clipped through a wall.

What’s more, one of the key behaviors I had made for the boss just wasn’t resonating with players. The boss could sometimes do a charged snipe shot that would always hit the player to knock them out of their saddle. It could not be dodged, so the player needed to break line of sight to break target lock. It was fun for me when I played it, forcing me to vary my strategy rather than just hitting the boss with all aggression all the time. Playtesters hated it. They couldn’t understand what was going on and none of them saw the big red laser sight as an indication that they should hide. I tried adding vocal barks, tried extending the time between lock and shoot, but nothing I did could get players to understand what was happening.

This forced me to take a step back and re-evaluate how the boss would work. While that behavior remains in the final build of the game, it no longer stands alone as the boss’s only special attack. I reused homing missile tech that Joon made for one of his enemies to provide an alternate attack so that the snipe behavior would happen less often, and I also added a check to make it only happen when the boss was visible on screen. In addition, I added a system for the boss to detect when it was out of the player’s view and teleport behind them before flying fast, which would make it more likely that players struggling to track the boss in 3D space would always be seeing him and fighting with him.

While I couldn’t make players understand how the snipe behavior worked, I could make being hit by it a few times less punishing. By adding more things for the player to do, like fighting additional spawned enemies, and adding those checks that needed to be met before the boss could enter that state, I turned it into a rare showpiece moment rather than something that happens constantly. When it appears now, most players are hit by it and forced to grapple around the arena to get back to their dragon, but when surveyed most thought it was something scripted and enjoyed the chance to shake up their gameplay, completely unaware that it was avoidable in the first place. If I’d had more time I would have kept iterating on the feature… but the end of our time on the project was approaching fast.
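The gating described above boils down to something like the sketch below. It is written in Python for brevity and is not the shipped boss code: `BossContext`, `choose_attack`, the cooldown and the snipe chance are all hypothetical stand-ins. The on-screen check and the homing missile alternative come from what I described above; the cooldown and the random roll are assumptions about how "less often" might be enforced.

```python
import random
from dataclasses import dataclass

SNIPE_COOLDOWN = 45.0  # assumed minimum seconds between snipe attacks
SNIPE_CHANCE = 0.25    # assumed chance to snipe once every check passes


@dataclass
class BossContext:
    boss_on_screen: bool             # is the boss inside the player's view?
    seconds_since_last_snipe: float  # time since the previous snipe attack


def choose_attack(ctx: BossContext, roll: float) -> str:
    """Pick the next special attack; `roll` is a random number in [0, 1)."""
    can_snipe = (
        ctx.boss_on_screen
        and ctx.seconds_since_last_snipe >= SNIPE_COOLDOWN
    )
    if can_snipe and roll < SNIPE_CHANCE:
        return "snipe"
    # Fall back to the homing missiles whenever any check fails.
    return "homing_missiles"


if __name__ == "__main__":
    ctx = BossContext(boss_on_screen=True, seconds_since_last_snipe=60.0)
    print(choose_attack(ctx, random.random()))
```

Passing the roll in as a parameter keeps the decision deterministic for a given input, which makes it easy to check that an off-screen boss can never start a snipe.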

The original sketch for the dragon's head

Overly hard shadows were a persistent bug that kept getting reintroduced

But with better shaders and time the look of the game started to come together

Senior year game projects unofficially end at week 10. That’s when the Game Developers Conference happens, and the assumption is that after that teams will be too busy building their portfolios, applying to jobs, and studying for finals in other classes to focus on this one game project. I had tickets to GDC and I wanted to show off my game to people there, which meant we all had to scramble to get the game running standalone on a Quest 3. While we did eventually get that working, I was left burned out before my plane’s wheels even touched down in San Francisco. I met some very cool people at GDC, and was even able to show the game to some VR-focused studios and YouTubers, but I think my general exhaustion from the scramble to get it ready was incredibly detrimental when it came time to be social and network.

Coming back from GDC, it was time to address the feedback and get it ready for a final build… and that meant finally addressing our technical debt.

Over the five months we’d already sunk into the project, there were countless “we’ll fix it later” sections in our code… Well, now it was later, and they had to be fixed. The game had to be playable from start to finish, had to be stable, had to have real level design, and oh-so-many minor bugs and features tied to debug keys had to be fixed. I’m proud of what we finally delivered, but even that isn’t particularly stable. In the last five weeks I had plenty of time to work on the project, since I was only taking three classes. Everyone else had tough do-or-die final projects to work on and tests to study for. We just kept going until suddenly we were all standing together in our caps and gowns.

Sometimes I find myself waking up in the morning ready to bike to school to fill out some more tickets on the Trello, and then I remember I’ve graduated and now there’s a different set of students at my team space. It’s a bittersweet feeling. I’m so proud of what we made, but there are still so many things that I think we could fix if we could work more on it. For all the frustrating bugs and constant disagreements over design, I still think that working on this project with these people was one of the best experiences of my life. I will treasure the development process of Breath of the Sky forever.

Showing off the game at the DigiPen playfest. One of the last times we got to meet as a team

In Conclusion:

Where did we succeed?

Where did we fail?

Thank you for reading this far